LLVM 15 Compile Time With Burst

With the Burst compiler that I work on here at Unity, we use LLVM to produce highly performant codegen from the High Performance C# (HPC#) subset that we compile. We keep Burst actively supported all the way back to LLVM 10, which means I can always get an idea of the performance improvements (or regressions) that occur between LLVM versions.

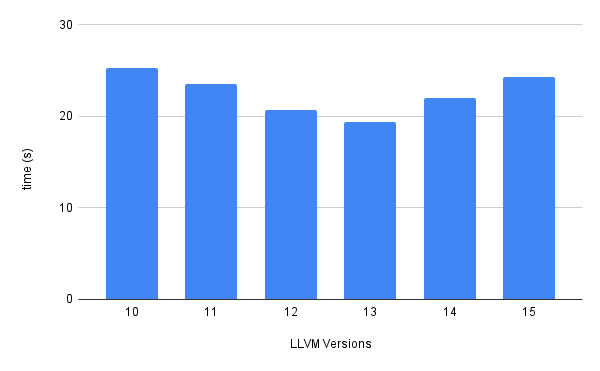

I’ve used a big internal project that amounts to about 2.5 million lines of LLVM IR after optimizations as the input for this test, and forced the compile through each LLVM version in turn, from LLVM 10 (the oldest supported) to LLVM 15 (the latest supported).
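For anyone wanting to run a similar comparison on their own project, here is a rough sketch of a timing harness. It is not the setup used for the numbers in this post: the helper names `median_wall_time` and `percent_change` are made up for illustration, and the command it times is a placeholder that you would swap for your own compiler invocation per LLVM version.

```python
import statistics
import subprocess
import sys
import time


def median_wall_time(command, runs=5):
    """Run `command` `runs` times and return the median wall-clock time in seconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        # check=True raises if the command fails, so a broken build is never timed.
        subprocess.run(
            command,
            check=True,
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
        )
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def percent_change(baseline, candidate):
    """Relative change in percent; positive means `candidate` took longer than `baseline`."""
    return (candidate - baseline) / baseline * 100.0


if __name__ == "__main__":
    # Placeholder command (assumption): replace with the real compile step.
    placeholder = [sys.executable, "-c", "pass"]
    print(f"median: {median_wall_time(placeholder, runs=3):.3f}s")
```

Taking the median over several runs keeps one noisy run from skewing the comparison, which matters when the differences between versions are in the tens of percent.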

As you can see from the chart, compile-time performance in LLVM was improving nicely until LLVM 13, and then LLVM 14 and now LLVM 15 have taken a pretty bad leap back towards the performance of LLVM 10. We use the new pass manager on LLVM versions 12 and above, so that is not the cause of this regression. We also do not use opaque pointers (our codebase relies on typed pointers for now, and it won’t be easy to unpick that!).

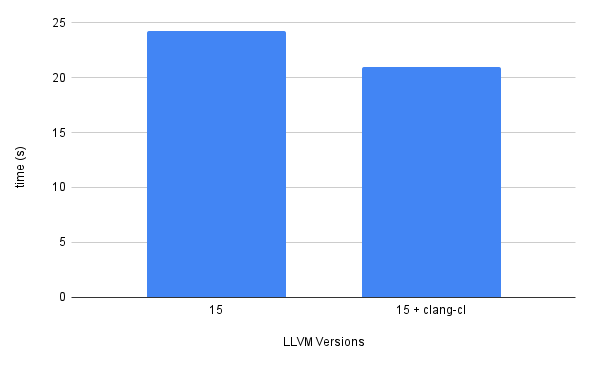

I’ve also heard people say that using clang-cl.exe will result in up to 30% better performance as compared to using the cl.exe shipped with Visual Studio. And yup, it’s true: 13% improved performance just for using the clang-based compiler. I’ll have to do a full performance rundown and check that debuggability is still just as good with clang-cl.exe, but for that level of performance improvement it looks like it is worth moving to.

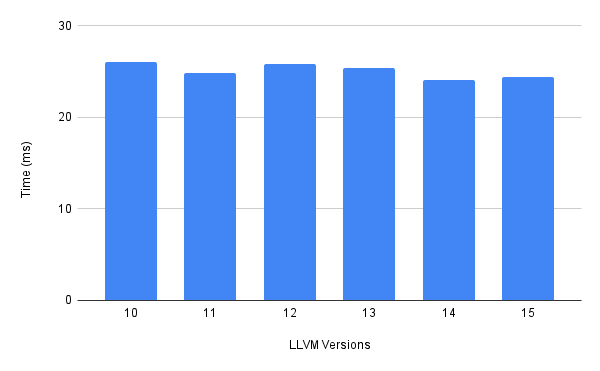

EDIT: I really should have provided the runtime performance across versions too:

As can be seen, it’s mostly in the noise: things got a little bit faster on LLVM 14 and regressed slightly on LLVM 15, but overall there is not much between the versions, despite the significant differences in compile time.