FPSCEV - Improving the Range Analysis

In this post, we’ll improve our floating-point scalar evolution (fpscev) to do a better job at evaluating the range of some of the more complex intrinsics we analyze.

This post is the latest in a series about my experimentations with floating-point scalar evolution, you really want to read them in order:

- An Experimental Floating-Point Scalar Evolution

- Using Floating-Point Scalar Evolution to Propagate Fast-Math Flags

- Using Floating-Point Scalar Evolution to Simplify Instructions

The fpscev pass changes discussed in this post is available on github here.

Improving the Range⌗

When I wrote the first draft of the fpscev analysis, any functionality that LLVM’s APFloat did not contain I simply assumed the worst case for the range of the input (-NaN..NaN unless fast-math flags were specified). For instance, the code used to evaluate the range of exp2 was:

FPSCEV fpscev(inst.getType());

// Exp always has a positive result.

const APFloat zero = APFloat::getZero(fpscev.min.getSemantics(), false);

fpscev.min = zero;

const FastMathFlags flags = inst.getFastMathFlags();

fpscev.max = applyFastMathFlags(fpscev.max, flags);

fpse.map[&inst] = fpscev;

I could do no better because APFloat did not contain an exp2 call that I could make use of to evaluate the range. After having some twitter discussions with the rather awesome Marc B. Reynolds, I realised I should revisit my assumption on the ranges of some of these intrinsics to see if I couldn’t make a better guess than what was there.

Exp2⌗

For exponentials with a base of 2 as I’ve already stated there isn’t an LLVM APFloat function that I can just call into. There is something sort of similar that I can use though to estimate the range such that we can return something better than 0..NaN! LLVM APFloat does contain a scalbn definition for APFloat. Scalbn allows you to efficiently multiply a value by 2-to-the-power-of another value - x * 2^y. If x just happened to be 1.0 - then we are effectively calculating 2^y. This just so happens to be exp2! The one downside is that we can only specify a y that is an integer - so we will lose some precision on the range by doing this.

FPSCEV exp2Bounds(FPSCEV fpscev) {

const fltSemantics &semantics = fpscev.getSemantics();

const APFloat one = getOne(semantics);

bool losesInfo;

if (fpscev.min.isFinite()) {

fpscev.min.roundToIntegral(APFloat::rmTowardNegative);

fpscev.min.convert(APFloat::IEEEdouble(), APFloat::rmTowardNegative,

&losesInfo);

const int power = static_cast<int>(fpscev.min.convertToDouble());

fpscev.min = scalbn(one, power, APFloat::rmTowardNegative);

} else {

fpscev.min = APFloat::getInf(semantics, true);

}

if (fpscev.max.isFinite()) {

fpscev.max.roundToIntegral(APFloat::rmTowardPositive);

fpscev.max.convert(APFloat::IEEEdouble(), APFloat::rmTowardPositive,

&losesInfo);

const int power = static_cast<int>(fpscev.max.convertToDouble());

fpscev.max = scalbn(one, power, APFloat::rmTowardPositive);

} else {

fpscev.max = APFloat::getInf(semantics, false);

}

return fpscev;

}

The approach I take is:

- Round the minimum range value down to the next whole integer, the maximum range up to the next whole integer.

- Convert them to doubles rounding the values away from zero again (this only really matters in the case where we are evaluating a quad-precision number that isn’t fully representable in 64-bits of precision).

- Convert these doubles to integer (as scalbn takes an int

y). I rely on the fact that compilers generally round an overflowing double to INT_MAX/INT_MIN which will easily create an infinity/-infinity when we raise that value by the power of 2. - Then use scalbn like I stated above to create the bounds.

- Note: I only attempt this approach at all if the min/max value were finite in the first place.

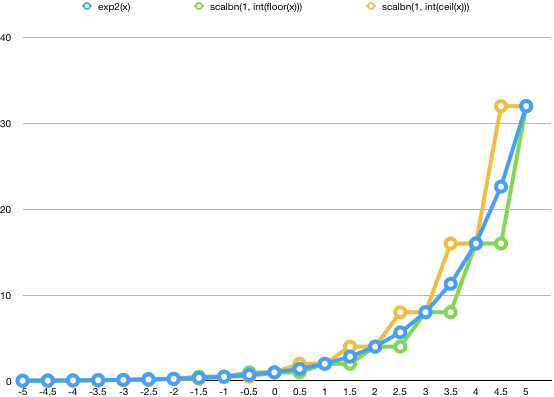

Lets have a look at the range of this exp2 value plotted across some key values to understand how accurate our evaluation is:

The chart above firstly shows that the actual value of exp2 if we could calculate it correctly lies inclusively within the calculated min/max ranges we’ve established using scalbn. So the result is always correct. You can also see that the higher the input value to the exp2 function, the more imprecise our range analysis becomes (as exp2 gets more, well, exponential the higher the input value this is to be expected).

All in all - this is pretty good! Much better than always returning a range of 0..NaN anyway.

Log2⌗

For log2 I explored a similar idea to the solution I used for exp2 above. While LLVM APFloat doesn’t have a suitable log2 equivalent function, it does have ilogb though. The ilogb function effectively extracts the exponent from the floating-point number without fudging it like which happens with frexp (frexp always returns a number between 0.5..1, and so has to fudge the exponent it returns to make sure of that). Because ilogb loses the fractional part of our floating-point number, we can use ilogb of the minimum-range to get the lower bound of our result. For the upper bound, we use ilogb and then add one onto it - this rounds up the estimation to the next highest whole number.

FPSCEV log2Bounds(FPSCEV fpscev) {

const fltSemantics &semantics = fpscev.getSemantics();

// If the full range is negative, always returns a NaN.

if (fpscev.isAllNegative()) {

APFloat nan = APFloat::getNaN(semantics, false);

return FPSCEV(nan, nan, false);

}

const APInt ilogbMin(32, ilogb(fpscev.min));

// We do +1 here so we round the range up.

const APInt ilogbMax(32, ilogb(fpscev.max) + 1);

APFloat values[2] = {getFromInt(semantics, ilogbMin, true),

getFromInt(semantics, ilogbMax, true)};

// If the input is entirely greater than zero, we can use ilogb to get a much

// firmer estimate on the log result.

if (fpscev.isGreaterThan(APFloat::getZero(semantics))) {

fpscev.min = getMinimum(values);

fpscev.max = getMaximum(values);

} else {

fpscev.min = APFloat::getInf(semantics, true);

fpscev.max = values[1];

}

// Can't be sure about this because we're getting very vague bounds, so wipe

// it.

fpscev.isInteger = false;

return fpscev;

}

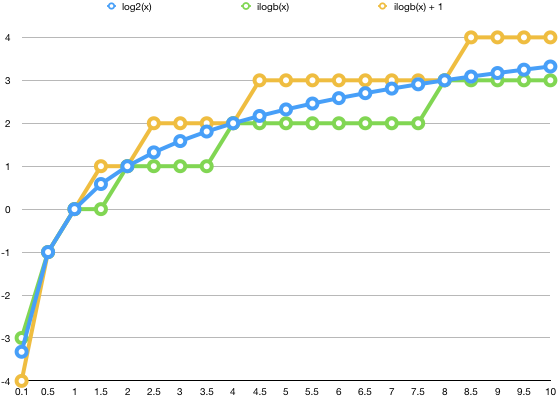

Lets have a look at the chart for this to show how good our bounds are:

As can be seen - the actual result is entirely within our calculated min/max range, and because logarithms grow so slowly the range isn’t so bad (as compared to our exponential prediction from before).

Log & Log10⌗

Logarithms have some very fun rules - one of which is the change of base formula. For those unfamiliar, any log can be transfered into any other log calculation by loga(x) = logb(x) / logb(a). This means we can use the calculation of the bounds we already discovered for log2 to calulate a bound for any other log! I use this same trick for log and log10 - so I’ll just cover log here to keep this succinct.

template <>

void FPScalarEvolutionPass::visitIntrinsic<Intrinsic::log>(

IntrinsicInst &inst) {

const FastMathFlags fmf = inst.getFastMathFlags();

FPSCEV fpscev =

fpse.getFPSCEV(inst.getOperand(0))->cloneWithFastMathFlags(fmf);

fpscev = log2Bounds(fpscev);

// We are calculating log(x), but we only have ilogb (which gives us an

// effective lower bound of log2(x)). To convert this bound into log(x) by

// multiplying it by log(2).

APFloat converter(

0.693147180559945309417232121458176568075500134360255254120);

bool losesInfo;

converter.convert(fpscev.getSemantics(), APFloat::rmNearestTiesToEven,

&losesInfo);

fpscev.min.multiply(converter, APFloat::rmTowardNegative);

fpscev.max.multiply(converter, APFloat::rmTowardPositive);

fpse.map[&inst] = fpscev.cloneWithFastMathFlags(fmf);

}

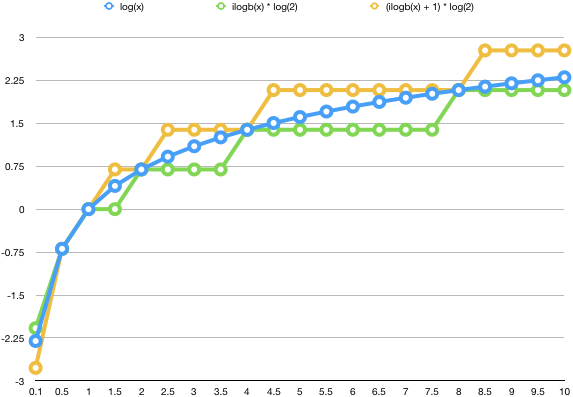

I basically calculate the log2 range of the input, and then use the change of base formula for logarithms to turn this into the bounds for log instead. Lets look at the chart:

Pretty good! The bounds follow a similar trajectory to the previous log2 calculation, but in this newer range of log.

Pow⌗

Anyone who has tried to implement pow(x, y) will know that there is a common hack that gets touted as some miracle solution to this calculation - turning pow(x, y) into exp2(y * log2(x)). There are numerous reasons why this isn’t generally a good idea (the precision loss is terrible when you do the y * log2(x), you have to handle a negative x to an integer y that might be odd/even and this affects the sign of the result, etc) - but since we have no alterative and we are ok to lose a bit of precision as long as we lose the precision correctly (EG. as long as we always grow the range through precision loss rather than accidentally shrink it).

Pow has a lot of chances to go awry (fractional bases, fractional powers, negatives) - so I play it very safe:

// For pow we use exp2(y * log2(x)) to get the best bounds we can hope for.

template <>

void FPScalarEvolutionPass::visitIntrinsic<Intrinsic::pow>(

IntrinsicInst &inst) {

const FastMathFlags fmf = inst.getFastMathFlags();

const FPSCEV xFpscev =

fpse.getFPSCEV(inst.getOperand(0))->cloneWithFastMathFlags(fmf);

const FPSCEV yFpscev =

fpse.getFPSCEV(inst.getOperand(1))->cloneWithFastMathFlags(fmf);

FPSCEV log2Fpscev = log2Bounds(xFpscev);

FPSCEV fpscevs[4] = {log2Fpscev, log2Fpscev, log2Fpscev, log2Fpscev};

fpscevs[0].min.multiply(yFpscev.min, APFloat::rmTowardNegative);

fpscevs[0].max.multiply(yFpscev.min, APFloat::rmTowardNegative);

fpscevs[1].min.multiply(yFpscev.max, APFloat::rmTowardNegative);

fpscevs[1].max.multiply(yFpscev.max, APFloat::rmTowardNegative);

fpscevs[2].min.multiply(yFpscev.min, APFloat::rmTowardPositive);

fpscevs[2].max.multiply(yFpscev.min, APFloat::rmTowardPositive);

fpscevs[3].min.multiply(yFpscev.max, APFloat::rmTowardPositive);

fpscevs[3].max.multiply(yFpscev.max, APFloat::rmTowardPositive);

fpscevs[0] = exp2Bounds(fpscevs[0]);

fpscevs[1] = exp2Bounds(fpscevs[1]);

fpscevs[2] = exp2Bounds(fpscevs[2]);

fpscevs[3] = exp2Bounds(fpscevs[3]);

FPSCEV result;

result.min = getMinimum({fpscevs[0].min, fpscevs[0].max, fpscevs[1].min,

fpscevs[1].max, fpscevs[2].min, fpscevs[2].max,

fpscevs[3].min, fpscevs[3].max});

result.max = getMaximum({fpscevs[0].min, fpscevs[0].max, fpscevs[1].min,

fpscevs[1].max, fpscevs[2].min, fpscevs[2].max,

fpscevs[3].min, fpscevs[3].max});

result.isInteger = false;

fpse.map[&inst] = result.cloneWithFastMathFlags(fmf);

}

The approach is basically:

- Get the log2 bounds of the input

x. - Multiply this by the bounds for

yresulting in 8 multiplications for the whole space of the pairings. - Feed these multiplications into the exp2 bounds.

- Then work out the minimum/maximum of all the resulting values.

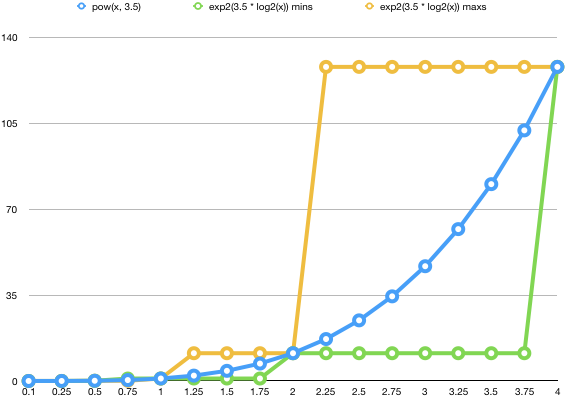

This gets us a very imprecise result, as the following chart shows:

It’s really very terribly imprecise - we are losing so much precision by the fact our exp and log are so very imprecise too. While this isn’t great - it is still better than having pow always return -NaN..NaN - at least we have some semblance of a range!

Sqrt⌗

Sqrt is one of the few functions that the C stdlib version is required to have 0.5 ulp (EG. the same precision as basic mathematical operators like + or -). This means, as long as we carefully round the min/max ranges as we translate from the APFloat to double, we can use the C stdlib sqrt implementation for our range calculation - huzzah!

FPSCEV sqrtBounds(FPSCEV fpscev) {

const fltSemantics &semantics = fpscev.getSemantics();

// If the full range is negative, always returns a NaN.

if (fpscev.isAllNegative()) {

APFloat nan = APFloat::getNaN(semantics, false);

return FPSCEV(nan, nan, false);

}

bool losesInfo;

if (fpscev.isAllNonNegative()) {

fpscev.min.convert(APFloat::IEEEdouble(), APFloat::rmTowardNegative,

&losesInfo);

// Get the next number less than the current (unless we are zero).

if (!fpscev.min.isZero()) {

fpscev.min.next(true);

}

fpscev.min = APFloat(std::sqrt(fpscev.min.convertToDouble()));

fpscev.min.convert(semantics, APFloat::rmTowardNegative, &losesInfo);

}

// If the whole fpscev is not negative, it means max must be positive.

if (!fpscev.isAllNegative()) {

fpscev.max.convert(APFloat::IEEEdouble(), APFloat::rmTowardPositive,

&losesInfo);

fpscev.max.next(false);

fpscev.max = APFloat(std::sqrt(fpscev.max.convertToDouble()));

fpscev.max.convert(semantics, APFloat::rmTowardPositive, &losesInfo);

}

fpscev.isInteger = false;

return fpscev;

}

The basic approach is that we:

- Check if the entire fpscev is not negative (meaning that min is definitely going to produce a non-NaN result).

- If so - convert the minimum value to APFloat’s double representation. This just allows us to convert to double using the

convertToDoublemethod. - If the converted value is not 0, then we move it over to the next smallest floating-point value using the

nextmethod (akin tonextafterin C). - Convert to double, use std::sqrt, then convert back, rounding towards negative as we convert back to the original semantics (ensuring we grow the min range on ties).

- Check if the fpscev is not all negative (meaning that max is definitely going to produce a non-NaN result).

- Do the same approach as for min, but use

nextto move the value up, and convert with rounding towards positive as we convert back (to grow the range).

This means we might get a slightly off result, but the result will be pretty strongly bound to what sqrt would produce.

For instance, for an input between 0..511, we produce an output range of:

min: 0

max: 22.6053104

isInteger: 0

Which is much better than the 0..NaN we have previously.

Conclusion⌗

With this improvement I’ve managed to really tighten up some of the functions I couldn’t get a good handle on previously.

In the next post I’m going to look at if it is possible to make our fpscev’s aware of where they are used, and see if we can’t constrain the range even further. Watch this space!